The AI Black Box: Are You Watching What's Leaving Your Network?

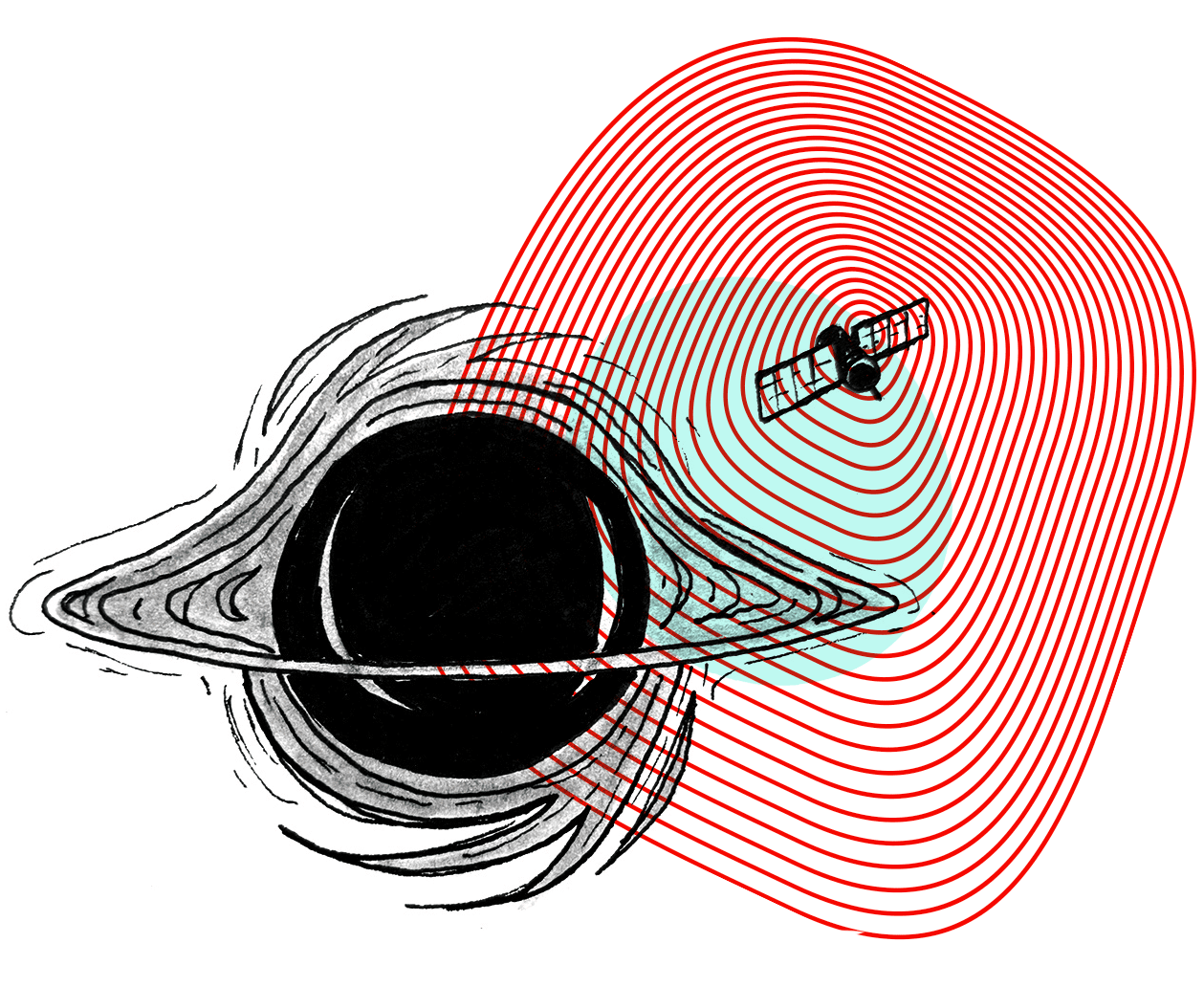

You're facing an unprecedented challenge. Business units, developers, and data science teams are in a sprint to integrate AI, embedding LLMs and AI agents into core applications. While the innovation is exciting, it has created a massive, encrypted blind spot: outbound API calls.

Every time an application on your servers calls an external third-party AI service, be it OpenAI, Anthropic, or any other vendor, it sends data over HTTPS. Because this traffic is encrypted, your traditional network monitoring tools are blind to the data leaving your environment.

This raises uncomfortable, high-stakes questions:

- Is proprietary source code being fed to an LLM to "debug" it?

- Is sensitive customer PII being included in prompts?

- What exactly is that new AI agent sending to its third-party SaaS integrations?

For a CISO, "I don't know" is not an acceptable answer. The risk of unintended data leakage, compliance violations, and shadow AI usage is enormous.

Why Capturing AI Transactions Is Harder Than It Looks

To solve the AI blind spot, organizations are trying a few different methods, each with serious trade-offs.

- Application-Level Logging (SDKs): The first approach is to rely on developers. You ask them to manually log every AI prompt and response, or you mandate a specific AI gateway or SDK. The problem? This is a compliance nightmare. It's an "opt-in" model that completely misses "Shadow AI." One developer using a raw `curl` or a different library, and your visibility is gone. It's not a complete security solution; it's a development guideline that's impossible to enforce.

- Inline Proxies (MITM Decryption): The second approach is the traditional network security model: inline proxies and man-in-the-middle decryption. As you know, this is often a non-starter for modern, high-throughput production environments. These methods add latency, create single points of failure, and are a massive operational burden, especially around certificate management.

Neither of these methods gives you what you actually need: a complete, independent, and non-disruptive way to see all AI traffic.

A New Approach: Visibility at the Source

This is where QTap comes in. QTap is a lightweight, open-source agent that sits on your hosts (VMs, Kubernetes nodes, servers). It uses a safe, modern kernel technology called eBPF to tap into your system at the source.

Here's the core concept:

- The "Magic": Instead of breaking TLS on the network, QTap intercepts data at the application layer by hooking into TLS library functions (like OpenSSL's `SSL_read` and `SSL_write`). This allows it to see the full plaintext request and response before it's encrypted or after it's decrypted.

- No Disruption: This is crucial. QTap operates out-of-band. It stealthily mirrors the data stream, adding virtually no latency and requiring no application code changes or certificate management. Your traffic flows completely uninterrupted.
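To make the hooking idea concrete, here is a user-space analogy in Python. It is not eBPF and not how QTap is implemented; it only illustrates the principle of copying plaintext at the function boundary just before encryption, without changing what the caller sees. `FakeTlsSession`, `tap`, and the request line are hypothetical names invented for this sketch.

```python
from typing import Callable, List


def tap(write_fn: Callable[[bytes], int], mirror: List[bytes]) -> Callable[[bytes], int]:
    """Wrap a plaintext-write function so each call is also copied to `mirror`."""
    def tapped(data: bytes) -> int:
        mirror.append(bytes(data))  # out-of-band copy of the plaintext
        return write_fn(data)       # original call proceeds unchanged
    return tapped


class FakeTlsSession:
    """Stand-in for a TLS session. XOR is used only to make the 'wire' bytes unreadable."""

    def __init__(self) -> None:
        self.wire: List[bytes] = []

    def write(self, data: bytes) -> int:
        self.wire.append(bytes(b ^ 0x5A for b in data))
        return len(data)


session = FakeTlsSession()
captured: List[bytes] = []

# Hook the write path. The application keeps calling session.write as before.
session.write = tap(session.write, captured)
session.write(b"POST /v1/chat HTTP/1.1")

print(captured[0])      # plaintext, as seen at the hook
print(session.wire[0])  # what a network-level monitor would see
```

The point of the analogy is the placement of the hook: a monitor that only sees `session.wire` has nothing readable, while a hook at the write call sees the request exactly as the application produced it.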

This is where QTap's open-source nature gives you full flexibility. You can run it in a "connected" mode or in a complete offline mode, managed only by a local YAML file. You are not locked in. QTap can even output metrics to your existing tools like Prometheus or OpenTelemetry.

For those who want convenience and powerful, centralized analytics, QTap can be connected to QPlane, QPoint's optional cloud control plane. But the core power of data capture and control remains with the open-source QTap agent in your environment.

What This Unlocks for Security & Compliance

This approach directly solves your biggest AI governance challenges.

1. Eliminate the Data Leakage Blind Spot

You can finally see exactly what data is being sent from your servers to any external AI or SaaS API. You can configure QTap policies to capture full payloads for high-risk domains (like api.openai.com). Coupled with the QScan plugin, the system can automatically classify any outgoing payload that contains PII, credentials, or proprietary source code. This turns your data leakage risk from an unknown "black box" into a manageable, auditable data flow.
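As a rough illustration of what payload classification involves, the sketch below tags a captured request body using a few regular expressions. This is not the QScan plugin or its rule set; the pattern names and the `classify` function are assumptions made for this example, and a production classifier would need far broader coverage and validation.

```python
import re
from typing import Dict, List, Pattern

# Hypothetical, deliberately small pattern set for illustration only.
PATTERNS: Dict[str, Pattern[str]] = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key": re.compile(r"-----BEGIN (?:RSA |EC )?PRIVATE KEY-----"),
}


def classify(payload: str) -> List[str]:
    """Return the sorted labels of every pattern found in the payload."""
    return sorted(label for label, pattern in PATTERNS.items() if pattern.search(payload))


prompt = '{"prompt": "Draft a reply to jane@example.com about SSN 123-45-6789"}'
print(classify(prompt))
```

Only the labels, not the matched values, are returned here, which keeps the classification result safe to forward to an alerting system.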

2. Manage Your "Invisible" SaaS Supply Chain

The AI challenge extends beyond LLM calls to the AI agents that integrate with your entire SaaS ecosystem (Slack, Jira, CRMs, etc.). QTap automatically discovers and inventories every external third-party service your application servers are contacting. When (not if) a third-party vendor reports a breach, your incident response is no longer a scramble. You can instantly see:

- If you were affected.

- Which services sent data to that vendor.

- Exactly what data was exposed.

This allows you to quantify your exposure in minutes, not weeks.
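The three questions above reduce to a simple query once every outbound flow has been recorded. The sketch below assumes a hypothetical `FlowRecord` shape (calling service, destination host, classification labels); it is not QTap's or QPlane's data model, and the domains are placeholders.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Set, Tuple


@dataclass(frozen=True)
class FlowRecord:
    service: str                       # internal service that made the call
    destination: str                   # external hostname contacted
    classifications: Tuple[str, ...]   # labels found in the payload


def exposure(records: Iterable[FlowRecord], vendor_domain: str) -> Dict[str, Set[str]]:
    """Map each internal service to the data classes it sent to the vendor."""
    result: Dict[str, Set[str]] = {}
    for record in records:
        host = record.destination
        # Match the vendor's apex domain and any subdomain, but not look-alikes.
        if host == vendor_domain or host.endswith("." + vendor_domain):
            result.setdefault(record.service, set()).update(record.classifications)
    return result


records = [
    FlowRecord("billing", "api.vendor.example", ("email",)),
    FlowRecord("billing", "files.vendor.example", ("credentials",)),
    FlowRecord("search", "api.other.example", ()),
]
print(exposure(records, "vendor.example"))
```

An empty result answers the first question ("were we affected?"), the keys answer the second, and the label sets answer the third.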

3. Automate Auditing & Prove Data Residency

For any CISO managing compliance (like GDPR or SOC 2), this is a game-changer. An auditor might ask, "Can you prove no EU customer data was processed by an AI service hosted in the US?" With QTap, you have an immutable, time-stamped audit trail of every data flow, complete with payload classifications and endpoint geolocation. You can definitively prove that regulated data never left its designated boundary.

4. Enable Safe AI Innovation

Most importantly, this technology allows you to move from being a "blocker" to being a "business enabler". Instead of banning new AI services out of fear, you can onboard them with a safety net of full visibility. You can give development teams the freedom to experiment, knowing you have a comprehensive log and real-time alerts to enforce safe usage guidelines.

Policy Examples (Concrete Use Cases)

This visibility allows you to move from passive monitoring to active, automated governance. You can create and enforce rules like:

- Alert on PII Leakage: Automatically alert when any connection from your production servers to an AI service contains PII classifications, credentials, or other sensitive data.

- Govern Shadow AI: Automatically capture full payloads for any new, unknown AI domain the first time it's seen. This creates an evidence package for you to review and approve the service or block it.

- Debug Agent Failures: Set a rule to capture full request and response payloads only for connections that result in a 4xx or 5xx error, giving developers instant context for debugging.

- Prove Data Residency: Log all connection metadata from your EU-based servers and alert if any of them establish a connection with a non-EU AI endpoint.
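The four rules above can be expressed as plain predicates over a connection record. The following is a minimal sketch of that logic, not QTap's policy syntax: the `Connection` fields, the action strings, and the region labels are all hypothetical, and "unknown AI domain" is simplified to "not on an approved list".

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Set

EU_REGIONS: Set[str] = {"eu"}  # assumed region labels for this sketch


@dataclass(frozen=True)
class Connection:
    source_region: str
    destination: str
    destination_region: str
    status: int
    classifications: FrozenSet[str]


def evaluate(conn: Connection, approved_domains: Set[str]) -> List[str]:
    """Return the governance actions triggered by one connection."""
    actions: List[str] = []
    if conn.classifications:                       # Alert on PII leakage
        actions.append("alert:sensitive-data")
    if conn.destination not in approved_domains:   # Govern shadow AI
        actions.append("capture:unapproved-domain")
    if 400 <= conn.status <= 599:                  # Debug agent failures
        actions.append("capture:error-response")
    if conn.source_region in EU_REGIONS and conn.destination_region not in EU_REGIONS:
        actions.append("alert:residency-violation")  # Prove data residency
    return actions


conn = Connection("eu", "api.example-ai.test", "us", 502, frozenset({"email"}))
print(evaluate(conn, approved_domains={"api.openai.com"}))
```

Keeping each rule as an independent check means one connection can trigger several actions at once, which matches how the use cases above overlap in practice.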

A Note on Your Data: A Security-First Architecture

Your first question is probably: "If this agent sees my plaintext data, where is it sending it?"

This is where QTap's architecture is critical. It is built on a "separation of data" principle.

- Sensitive Payloads, like the actual headers and bodies, NEVER go to the QPlane cloud. They are sent directly from the QTap agent to your own private object storage (e.g., your S3 bucket or Azure Blob).

- Anonymized Metadata, like IPs, ports, timings, and status codes, is the only thing sent to the QPlane cloud, and only if you choose to use it for centralized dashboards and alerts.

Sensitive content remains within your security boundary, under your control. This model preserves data sovereignty and gives you full control, whether you use QPlane or not.
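One way to picture the separation is an allow-list split applied to each captured record before anything leaves the host. The field names and the `split_record` function below are assumptions for illustration; they do not describe QTap's actual record format or the anonymization it applies.

```python
from typing import Any, Dict, Tuple

# Only these fields may go to a central control plane (hypothetical names).
METADATA_FIELDS = ("src_ip", "dst_ip", "dst_port", "duration_ms", "status")


def split_record(record: Dict[str, Any]) -> Tuple[Dict[str, Any], Dict[str, Any]]:
    """Split a record into (metadata for the cloud, payload for private storage)."""
    metadata = {key: record[key] for key in METADATA_FIELDS if key in record}
    payload = {key: value for key, value in record.items() if key not in METADATA_FIELDS}
    return metadata, payload


record = {
    "src_ip": "10.0.0.5",
    "dst_ip": "203.0.113.10",
    "dst_port": 443,
    "duration_ms": 182,
    "status": 200,
    "headers": {"authorization": "Bearer example-token"},
    "body": '{"prompt": "hello"}',
}
metadata, payload = split_record(record)
print(sorted(metadata))  # fields eligible for the control plane
print(sorted(payload))   # fields that stay in your own object storage
```

Using an allow-list rather than a deny-list is the safer default: any field nobody anticipated stays on the private side.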

Conclusion: Stop Guessing, Start Knowing

The rapid adoption of AI doesn't have to be a security nightmare. You can't secure what you can't see. By moving observability to the source, before encryption happens, you can finally close this critical visibility gap.

This approach allows you to manage risk, automate compliance, and, most importantly, confidently enable the business to innovate with AI, responsibly.