The Impossible Proxy - An Egress Journey

"We just need all external traffic to go through an egress proxy."

That's how it always starts. A seemingly simple security requirement that sounds reasonable in the boardroom. The CISO nods. The compliance team is satisfied. The architecture review board approves. And then, someone has to actually build it.

That someone is now you.

Month 1: The Research Phase

You start with a simple plan. First, understand the requirements:

- Security wants visibility into all outbound traffic

- Compliance needs an audit trail of external communications

- The risk team wants a single point of control for external access

It sounds straightforward on paper. You've done your reading – forward proxies, reverse proxies, transparent proxies. You've studied the RFCs. You understand the theory.

Your first step is logical: send out a company-wide survey asking teams to document their external dependencies. You create a simple template and distribute it with an executive sponsor's blessing.

Two weeks later, you have responses from 12 out of 70 teams.

The ones who did respond list a handful of obvious integrations: Stripe, Twilio, maybe a few public APIs. But something feels off. These are complex applications – surely they connect to more than three external services?

You create a project plan with three phases: discovery, planning, and implementation. You set a three-month timeline. Your manager approves the plan with a skeptical eyebrow.

Month 2: The Archaeology Expedition

With the survey approach clearly insufficient, you roll up your sleeves for some digital archaeology. Armed with a packet analyzer and stronger coffee, you spend weeks excavating the hidden artifacts of your infrastructure. Each layer reveals a new surprise:

- The payment service doesn't just call Stripe – it calls eight different endpoints across three regions

- Your logging libraries silently call home to check for updates

- The CI/CD pipeline connects to 14 different GitHub endpoints

- Your container images are pulling from five different registries

- Your NPM dependencies are fetching sub-dependencies from domains you've never heard of

By month's end, you've documented over 300 external endpoints across your infrastructure. The spreadsheet grows daily. You start to realize the scale of what you're up against.

Month 3: The Planning & First Implementation

You design your proxy architecture: a cluster of proxy servers, high availability, load balancing, failover. You write policies for common services. You build allowlists for known domains.

For the first implementation, you try the simplest approach. Configure the proxy and set the HTTPS_PROXY environment variable across the fleet. Push the change to development environments.

Within minutes, Slack lights up with reports of:

- Failed deployments

- Broken integration tests

- Development environments that won't start

- Libraries that can't update

The troubleshooting reveals that even with environment variables like HTTPS_PROXY set, many applications and libraries either ignore these settings or implement proxy support inconsistently.

You quickly revert and regroup. This is going to be harder than you thought.

Month 4: The Transparent Proxy Attempt

After the first failure, you decide on a more aggressive approach: a transparent proxy using SNI inspection. Since most modern TLS connections include the Server Name Indication (SNI) in the handshake, your proxy can see the destination hostname without breaking encryption.

The plan:

- Intercept all outbound traffic at the network level

- Inspect the SNI field during the TLS handshake

- Allow or block based on the hostname, without breaking encryption

- Forward allowed connections to their destination

Two weeks later, you're dealing with a different set of problems:

- Older TLS implementations don't use SNI

- Some libraries disable SNI for privacy reasons

- Multiple services behind a single hostname can't be distinguished

- You can see destinations but can't inspect content for data loss prevention

The biggest issue: you can only allow or block connections, but you can't monitor what's actually being sent. For your security team, this is insufficient.

Month 5-6: The Certificate Nightmare

You realize there's no avoiding it: to get the visibility needed, you'll have to break and inspect the encrypted traffic. This means implementing TLS interception:

- Generate a custom Certificate Authority (CA)

- Add this CA to every application's trust store

- Configure your proxy to sign certificates on-the-fly for each destination

- Intercept, decrypt, inspect, then re-encrypt all traffic

The certificate distribution alone becomes a massive project:

- Updating base images for containers

- Pushing certificate updates to virtual machines

- Training developers on how to include the CA in their applications

- Building verification systems to ensure certificates are properly installed

Meanwhile, you encounter a barrage of technical issues:

- Legacy applications with hardcoded certificate stores

- Certificate pinning in security-conscious libraries

- Modern browsers and libraries that reject your certificates due to security features

- Applications that use mutual TLS, where both client and server authenticate each other

A month after deployment, a critical integration with a financial partner starts failing intermittently. After days of debugging, you discover they're using certificate pinning – their client explicitly verifies the server's exact certificate, rejecting your proxy's substitute.

For each of these issues, you need custom solutions, exceptions, and workarounds. Your elegant proxy design is becoming a patchwork of special cases.

Month 7: The Authentication Maze

With certificates somewhat stabilized, new authentication problems surface:

- OAuth flows that break when redirects pass through the proxy

- API keys embedded in requests that now get logged by your proxy

- JWT tokens with audience validation that fails through proxies

- Services that validate client IP addresses, which get masked by the proxy

Each authentication method requires special handling. Your configuration grows to thousands of lines, with special cases for dozens of services.

Meanwhile, the security team is breathing down your neck. They want full visibility, but every exception you create is a potential blind spot.

Even with authentication issues partially resolved, new problems emerge. Your monitoring shows increased latency and occasional timeouts. The proxy has become a bottleneck:

- Each connection requires two TLS handshakes instead of one

- CPU usage spikes as proxies handle encryption/decryption for all traffic

- Connection pooling becomes ineffective with thousands of destinations

- Memory usage grows with each concurrent connection

You scale up the proxy infrastructure, but costs spiral quickly. What started as a simple security project now consumes significant infrastructure budget.

The worst part? High-frequency trading APIs and real-time services report that even milliseconds of added latency impact their functionality.

Month 9: The Container Uprising

Just as you think you're making progress, containerization introduces new challenges:

- Sidecar containers making their own external calls without proxy awareness

- Init containers pulling from external registries during startup

- Service meshes with their own TLS termination that conflicts with your proxy

- Dynamic scaling that causes connection pools to fluctuate wildly

Each container seems to have its own ideas about how to communicate with the outside world, and none of them involve your carefully crafted proxy setup.

Month 10: The Retrospection

Ten months in, you call a timeout. In a candid presentation to leadership, you outline the fundamental challenges:

- Incomplete Discovery: No team knows all their external dependencies

- TLS Complexity: Breaking encryption creates more problems than it solves

- Certificate Management: Distribution and updating is a massive undertaking

- Authentication Diversity: Different services use incompatible authentication mechanisms

- Scale Issues: At microservice scale, centralized proxies become bottlenecks

- Performance Impact: Added latency affects critical business functions

- Container Complexity: Modern deployment models resist centralized control

You realize you've been trying to solve a distributed problem with a centralized solution. The traditional proxy model simply doesn't work at microservice scale.

The Breakthrough

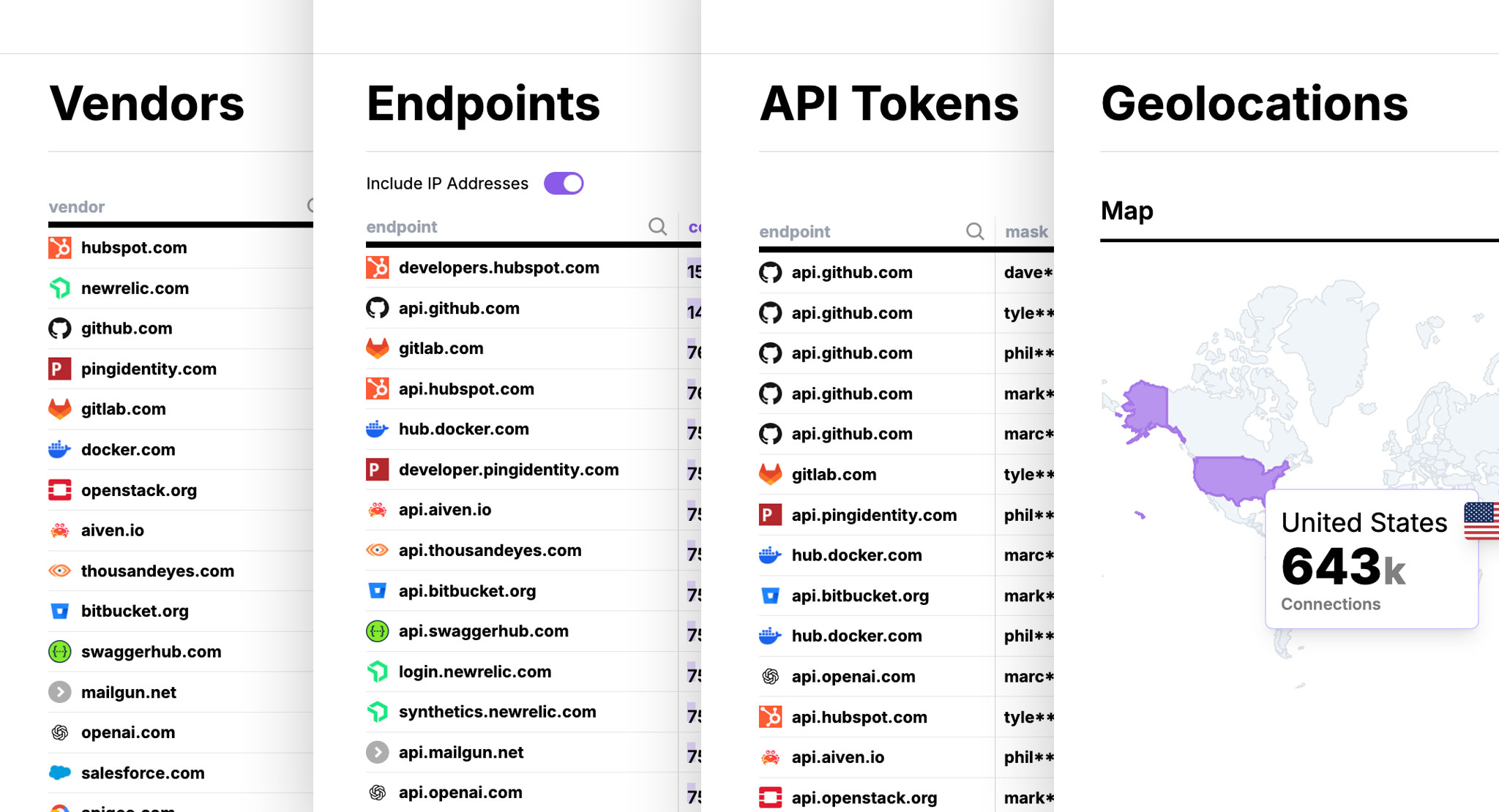

The turning point comes when you discover Qpoint and shift your thinking from network-level interception to kernel-based visibility:

- Host-Based Approach: Qpoint deploys lightweight agents that see traffic before encryption happens at the source

- Process Attribution: Identify exactly which processes, containers, or services are initiating connections

- Pre-Encryption Visibility: Access the content of external calls without breaking TLS or managing certificates

- Real-Time Discovery: Automatically catalog all external endpoints your applications actually use in production

- Non-Invasive Implementation: Deploy without changing application code or network architecture

Instead of a monolithic proxy breaking and rebuilding all TLS connections, Qpoint provides a distributed system of visibility and control – one that works with your architecture rather than against it.

The difference is transformative:

- Complete Visibility: See all external connections, including those from libraries and dependencies

- Zero Certificate Hassles: No need to distribute custom CAs or deal with certificate pinning issues

- Performance Preservation: No added latency since traffic isn't routed through a centralized bottleneck

- Granular Control: Apply policies based on process identity, not just destination

- Actual Payloads: Capture the real content of API calls when needed for debugging or security

- Deployment Simplicity: Roll out incrementally without disrupting existing services

The Reality Check

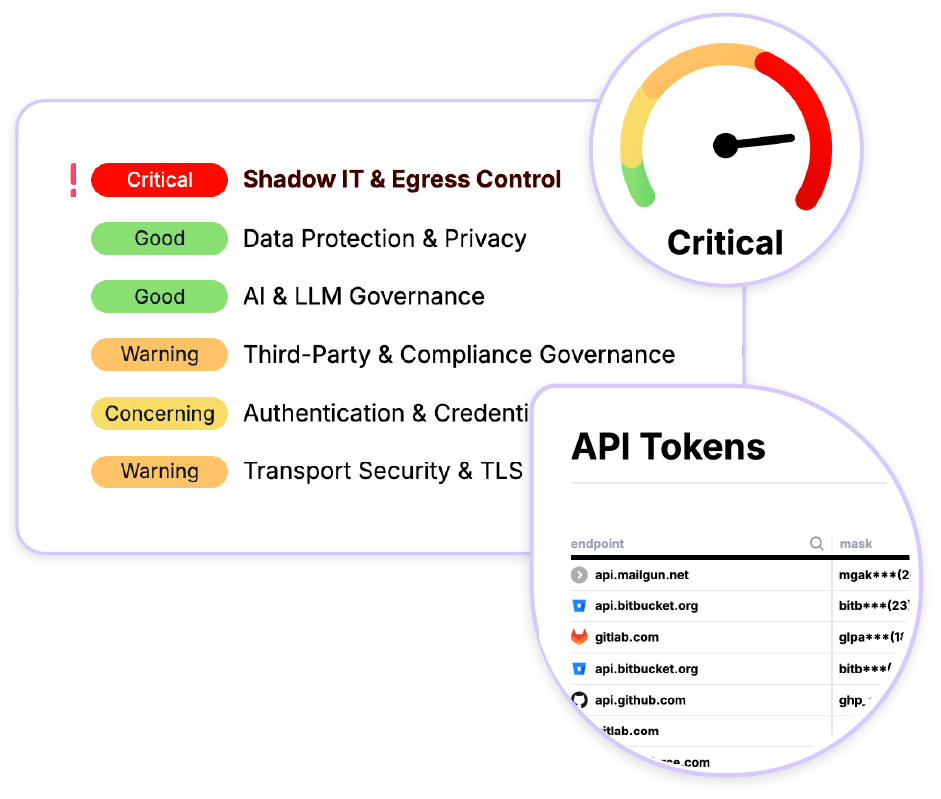

The truth about implementing egress proxies in complex microservice environments isn't about finding the perfect technical solution. It's about recognizing that:

- TLS Wasn't Designed for Interception: The security properties of TLS deliberately make intermediaries difficult

- Complete Control Is an Illusion: In highly distributed systems, you need governance, not absolute control

- Context Matters More Than Barriers: Understanding what's happening is more valuable than blindly blocking traffic

- Security Must Scale: Security controls must be as distributed as the systems they protect

- Visibility Precedes Control: You can't effectively control what you can't see

The journey teaches you something profound: in modern architectures, the context around connections – why they exist, what data they carry, which processes initiate them – matters more than simply routing them through a checkpoint.

Rather than a single proxy to rule them all, what organizations truly need is a control plane for external connections – one that provides visibility first, then enables intelligent, context-aware controls that work with the grain of your infrastructure, not against it.

With Qpoint, you finally get the missing context – comprehensive visibility into your external services without the architectural compromises that made traditional proxies so problematic in modern environments. By tapping into connections at their source using eBPF technology, Qpoint brings your external services into focus, exactly where traditional tools have left you blind.

Because when you're dealing with thousands of microservices, each with its own external dependencies, the impossible proxy doesn't have to be a proxy at all.

Ready to see what you've been missing? Schedule a demo with a Qpoint solution engineer and discover what's actually happening in your infrastructure.

For more insights on security, compliance, and network visibility, explore our blog for the latest thought leadership from the Qpoint team.